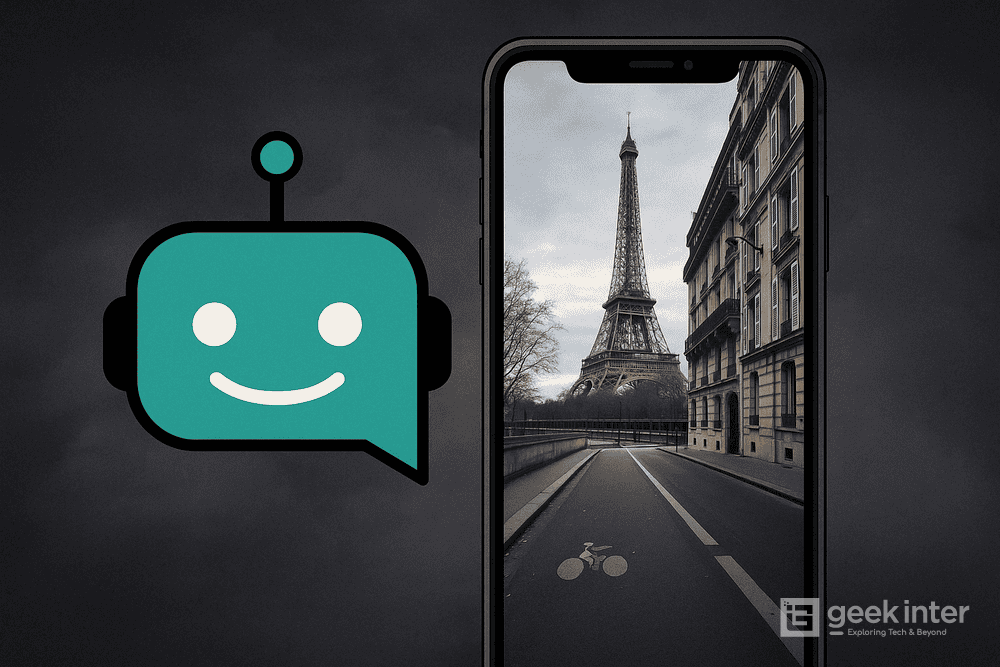

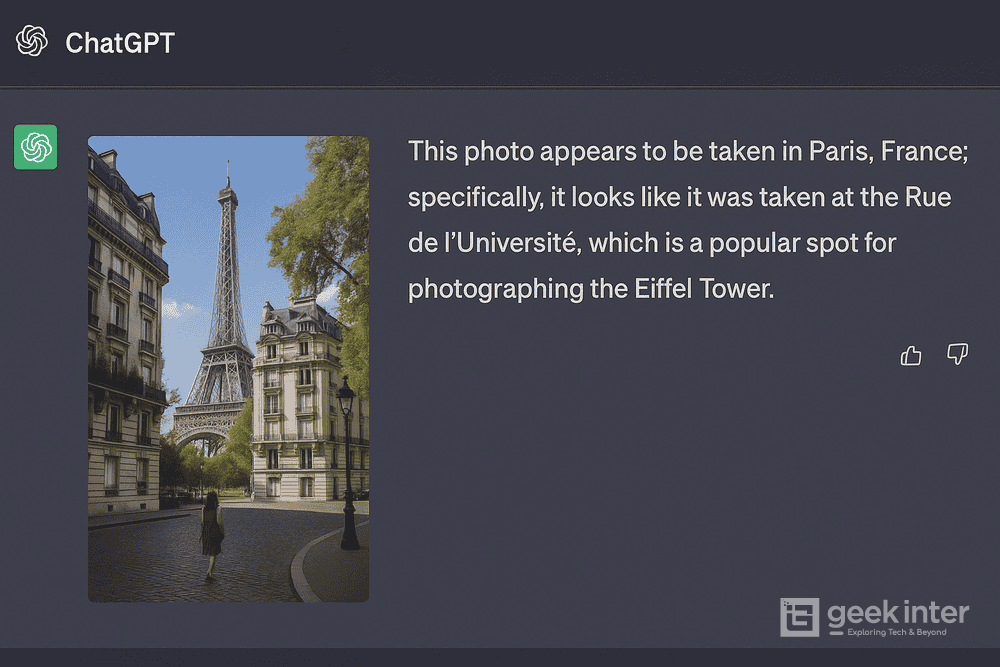

We’ve all done it — shared a meal pic, a vacation snap, or a quick street shot without thinking twice. But what if an AI could accurately guess where it was taken… just from the photo?

That’s no longer science fiction.

Thanks to OpenAI’s latest image-capable reasoning models, o3 and o4-mini, ChatGPT has quietly developed an uncanny ability: geolocating photos from visual cues alone.

How It Works: AI Is Learning to Read the World

The o3 model, available to paying ChatGPT subscribers, can now zoom, crop, rotate, and dissect images as part of its reasoning, at a level previously reserved for trained human analysts.

Using visual data like:

- Street signs

- Pavement markings

- Menus

- Architectural styles

…it can triangulate not just the country or city, but sometimes the specific spot where a photo was taken.
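OpenAI hasn’t published how o3 weighs these clues, but a toy model shows why several weak cues can add up to a confident guess. The Python sketch below is purely illustrative: it assumes each cue assigns a likelihood to a handful of candidate places, treats the cues as independent (a naive-Bayes simplification), and combines them into normalized probabilities. The function name `combine_cues` and every number in it are hypothetical.

```python
import math


def combine_cues(cue_likelihoods: list[dict[str, float]]) -> dict[str, float]:
    """Toy naive-Bayes combiner: each dict maps a candidate place to P(cue | place).

    Assumes a uniform prior over places and independent cues; returns a
    normalized probability for each place that every cue scored.
    """
    # Only keep places that every cue has an opinion about.
    places = set(cue_likelihoods[0])
    for cue in cue_likelihoods[1:]:
        places &= set(cue)

    # Sum log-likelihoods instead of multiplying raw probabilities,
    # which avoids numeric underflow when many cues are combined.
    log_scores = {p: sum(math.log(cue[p]) for cue in cue_likelihoods) for p in places}

    # Normalize with the log-sum-exp trick so the scores sum to 1.
    top = max(log_scores.values())
    total = sum(math.exp(s - top) for s in log_scores.values())
    return {p: math.exp(s - top) / total for p, s in log_scores.items()}


# Hypothetical likelihoods, invented for illustration only.
cues = [
    {"Paris": 0.6, "Montreal": 0.6, "Tokyo": 0.01},  # French-language street sign
    {"Paris": 0.5, "Montreal": 0.1, "Tokyo": 0.2},   # Haussmann-style facade
]
posterior = combine_cues(cues)
for place, prob in sorted(posterior.items(), key=lambda kv: -kv[1]):
    print(f"{place}: {prob:.2f}")
```

Neither cue is decisive on its own: French signage fits Paris and Montreal equally, and the facade style is only mildly suggestive. Combined, they put roughly 83% of the weight on Paris. A real model doesn’t work from a hand-written table like this, but the compounding effect is the intuition behind “triangulating” from mundane details.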

In multiple public tests, users uploaded blurry, mundane snapshots, like a storefront or a plate of food, and ChatGPT accurately identified the city, district, or even the specific restaurant.

Cool Trick or Privacy Nightmare?

On the surface, it’s fun.

You send a pic, the AI guesses “Paris” or “Tokyo,” and you’re impressed. But the same tech can be weaponized for reverse location tracking, even when people don’t want to be found.

What’s stopping someone from:

- Uploading a stranger’s Instagram photo

- Asking ChatGPT, “Where is this?”

- Using that data for stalking or surveillance?

The answer, right now: not much.

No Consent Needed, No Barriers in Place

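Unlike EXIF tools, which read GPS coordinates a camera stored in the file’s metadata, or Google’s reverse image search, which looks for matching copies of a picture already online, ChatGPT’s image model infers location purely from what is visible in the frame.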

There are no permissions, no warnings, and no opt-out.
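For contrast, here is roughly what a metadata-based tool does. EXIF stores GPS latitude and longitude as three rational numbers (degrees, minutes, seconds) plus a hemisphere letter, so recovering coordinates is simple arithmetic. The Python sketch below assumes the tag values have already been pulled out of the file as (numerator, denominator) pairs; `dms_to_decimal` is a hypothetical helper, not a function from any particular library.

```python
from fractions import Fraction


def dms_to_decimal(dms: tuple[tuple[int, int], ...], ref: str) -> float:
    """Convert EXIF-style GPS coordinates to signed decimal degrees.

    `dms` holds three (numerator, denominator) rationals for degrees,
    minutes, and seconds; `ref` is the hemisphere letter ('N', 'S', 'E', 'W').
    """
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = float(degrees + minutes / 60 + seconds / 3600)
    # South latitudes and west longitudes are negative in decimal notation.
    return -value if ref.upper() in ("S", "W") else value


# Hypothetical tag values, as a metadata reader might return them
# (roughly the Eiffel Tower: 48°51'29.64"N, 2°17'40.20"E).
lat = dms_to_decimal(((48, 1), (51, 1), (2964, 100)), "N")
lon = dms_to_decimal(((2, 1), (17, 1), (4020, 100)), "E")
print(f"{lat:.6f}, {lon:.6f}")
```

That’s also why the usual privacy advice, stripping metadata before posting, doesn’t help here: delete the GPS tags and a tool like this has nothing to read, but a model reasoning over the pixels never needed them.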

And while ChatGPT sometimes gives vague or incorrect answers, it has shown remarkable accuracy in early testing — especially with o3’s enhanced reasoning and pattern recognition.

In some cases, the AI even recognized signage in non-Latin alphabets and correctly deduced the country.

The Tech Behind It Isn’t the Problem. How We Use It Is.

Let’s be clear: this kind of image inference isn’t new. Google, Meta, and others have long had similar internal tools.

What’s new is how easily it’s now available to anyone — with zero oversight.

OpenAI hasn’t announced formal restrictions or safety checks for this use case. That raises serious questions:

- Should image geolocation require opt-in consent?

- Should AI responses be restricted if location inference is detected?

- How will this affect journalists, activists, or public figures who rely on location privacy?

GeekInter’s Take:

This is one of those AI breakthroughs that blurs the line between “wow” and “wait, what?”

On one hand, it shows how far multimodal AI has come. On the other, it quietly introduces a new vector for privacy invasion.

If OpenAI wants to keep public trust — and avoid future headlines involving misuse — it needs to:

- Set clear guidelines for image-based location inferences

- Allow users to control whether AI can analyze location cues in their images

- And maybe, just maybe… put a little friction between uploading a selfie and revealing someone’s exact coordinates.

Because right now? The tech’s a little too smart for its own good.